About

While my time is in the future relative to yours, and my Post-Anthropocene world is very different to the world that you inhabit, I have a few thoughts on the development of generative AI in your time and world.

I am not opposed to AI per se. AI models with a specific and well-defined purpose, trained on limited, relevant and human-selected datasets, show enormous promise in applications such as assistive technology communication devices. What I am concerned about is generative AI, and specifically LLMs (Large Language Models). My objections to these models fall into six main areas.

1. Environmental threats

The environmental impact of generative AI is substantial and, just like all environmental impacts, will affect the world’s poorest first and worst. The data centres that run these models draw enormous amounts of electricity, which requires an uptick in generation. Increasing carbon emissions in order to power a technology that is as energy intensive as AI (and that we can easily live without) seems counterproductive. Drinking water is already under threat from the huge quantities of fresh water required to cool AI data centres, and the increase in carbon emissions generated to power these data centres will affect the developing world most acutely. The increase in mining and consumption of valuable rare earth minerals to produce GPU chips is a drain on finite resources and a source of pollution. Downstream from this, the toxic e-waste that the development of AI produces will continue to be literally dumped on those who are furthest from the advantages that the technology promises.

2. Intellectual Property threats

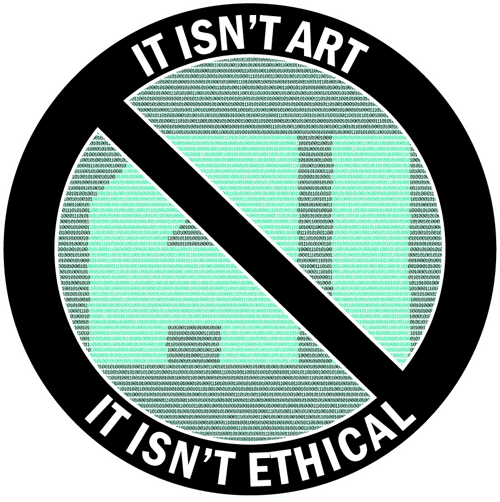

The data harvesting of these models is indiscriminate and has no concept of, let alone respect for, intellectual property, privacy or consent. Visual artists have already seen their work plagiarized and spat out as “new” content to satisfy a prompt and to be resold as stock images. As LLMs mine and scrape data they could eventually analyse all human-created content available, enabling any of it to be uncritically and unethically re-contextualized and regurgitated with no thought for the creators’ rights, needs or wishes. If human creativity incorporates this technology as a matter of course, it will undermine the very notion of intellectual property to the point that the concept could become meaningless. This has already been tested in court, in cases determining that “creators” who use AI models in their work may not in fact own the content that they have collaborated with AI to generate.

3. Devaluing human creativity

If human creativity is to incorporate this technology, not only will creators be plagiarizing the work of others with no awareness or empathy, they will also be helping to train the very AI models that have been promoted as assisting them, so that those models can ultimately replace them. If creativity and genuine skills are allowed to be replaced by the ability to have an AI model “create” something based on any aspect of the collective human history of creativity for free, or for a low monthly fee, then creative endeavours in general could become economically unviable.

4. Primitive accumulation

The process of harvesting the huge swathes of data that LLMs need in order to be trained is a form of primitive accumulation. Each capitalist system has been preceded by a period of forceful gathering of resources from the majority into the hands of a few. It appears that we are now seeing a new wave of accumulation of the assets of the many into the hands of a few in order to enable the burgeoning frontier of cloud capital. The data that these models scrape is part of this, and so is the data that we enter into these models as prompts and as personal data required for account creation; all of it can be leveraged for profit by the owners of these models. The owners of these models, and many other employers, will not want to employ anyone who can be replaced by this technology. If we allow our intellectual effort to be devalued to the point that synthesized AI content is considered equal to human creativity, we may be entering an era in which not only are the means of production owned and run by a minority at the expense of the majority, but the mode of production is owned by the capitalist class also.

5. Threats to social cohesion

Generative AI poses threats to social cohesion similar to those posed by social media. Paired with social media, generative AI threatens to increase the severity of these existing threats exponentially. Social media has long been used to disseminate conspiracy theories, biased information, misinformation and disinformation. AI enables anyone to rapidly produce convincing misinformation on a wide scale, in the form of fake articles and reports as well as deep fake images, videos and audio. The democratization of deep fakes, and the ability to rapidly disseminate them via social media, has already flooded social media platforms and the internet in general with slop at best and harmful deep fakes at worst. This scenario has already eroded trust in the media and public institutions as well as undermined trust in electoral processes and democracy itself. If biased, inaccurate or patently false information is allowed to continue to flood online platforms, these problems will worsen, and countering them will only become harder, as finding trustworthy information and knowing which information is trustworthy will become increasingly difficult. The big tech institutions have demonstrated that they are not merely uninterested in the safety of users or in protecting social cohesion; in order to chase market dominance and profit, they are actively working against both.

6. Potential cognitive atrophy

I fear that outsourcing our intellectual efforts to a machine that seeks patterns, repetition and probability is not only to settle for an alternative that is far inferior to our own abilities, it is to risk allowing our current cognitive and intellectual abilities to regress. Can we really expect that adopting technologies such as LLMs will have no such negative consequence?

If the consequences of adopting generative AI into workflows and daily life are a media landscape awash with slop and the widespread enshittification of websites, platforms, apps and programs, a devaluing of the concept of human creativity, a loss of the ability to use intellectual effort to produce any form of value, an economic system that is increasingly exploitative and inhuman, and a planet unable to sustain human or any other life, then I struggle to comprehend what the benefits are.

If prevention of the widespread adoption of generative AI is not an option, then the minimum standard of ethical conduct regarding generative AI, and the only ethical action available, is to refuse to have any part in it.
